Posts tagged with 'testing'

Note from Matt: This is a guest post by James Bender for the Third Annual C# Advent.

For those who don’t know me, I’m that guy. You know the type: the one who is super OCD about their code, stresses component-based architecture that encourages reuse, and is into unit testing. I think a lot of problems in the world could be solved if we all just practiced Test Driven Development.

So, when Matt reached out to me about writing this post, the first thing I thought of was unit testing! And so that’s what this post will be about!

Everyone: GROOOAAAANNN

Hey! When you all start unit testing, I’ll stop harping about it!

While I prefer the idea of TDD, I understand that it isn’t always an option. People who are just starting off with unit testing might not feel comfortable with the “test-first” paradigm, which is totally understandable and completely fine. Some people (like myself) often inherit codebases where the code is already there, but there is not a test to be found. Sometimes, it feels like it would take a miracle to make these codebases even testable!

Behold, a Christmas Miracle!

Well, I may be overselling the whole “miracle” aspect, but this will still be pretty cool. We’re going to take some code and refactor it to make it more testable.

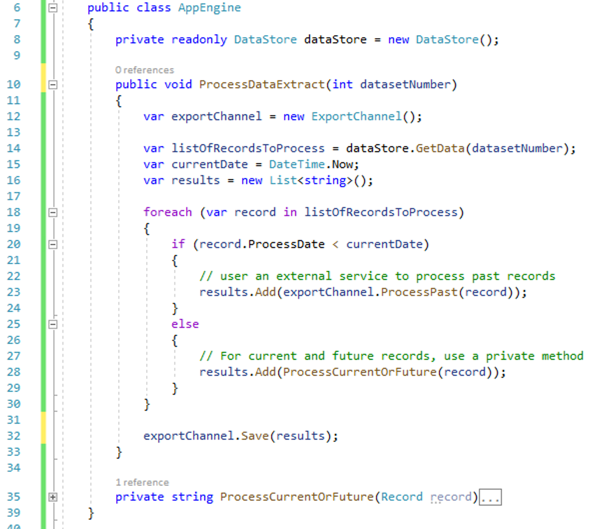

Here is our starting point:

This is an amalgamation of a lot of the problems I see with code that limit its testability. To be fair, this code has a lot of issues, but today we’ll just deal with the ones that limit its testability.

There are a variety of ways to deal with refactoring this, and not everyone will approach it the same way. In my case, the first thing I’m noticing is that the function we’ll be refactoring (ProcessDataExtract) doesn’t return a value. This can make it difficult to determine if my test passes; if I don’t have any output, how can I verify the method worked? Yeah, I could create a mock of the exportChannel object and ask it if its Save method was called, and what data it was called with. But this isn’t the best approach. I’m relying on a supporting object (the mock) to provide basic functionality that my test should be able to do without help. And what happens if there’s a change to ExportChannel causing me to change my mock? This makes this test difficult to maintain. So, for my first refactoring, I’ll be changing the return type:

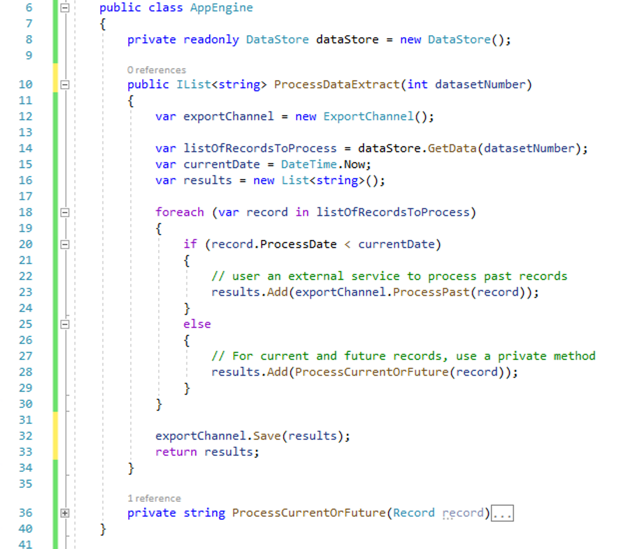

On line 10, I change the function signature to return an IList of type string. I added a return statement on line 33 to return the results list that is sent to the Save method of the ExportChannel. This enables me to interrogate the results list directly to verify my test. It also makes ProcessDataExtract itself more usable, because it now returns data instead of seemingly swallowing it up.

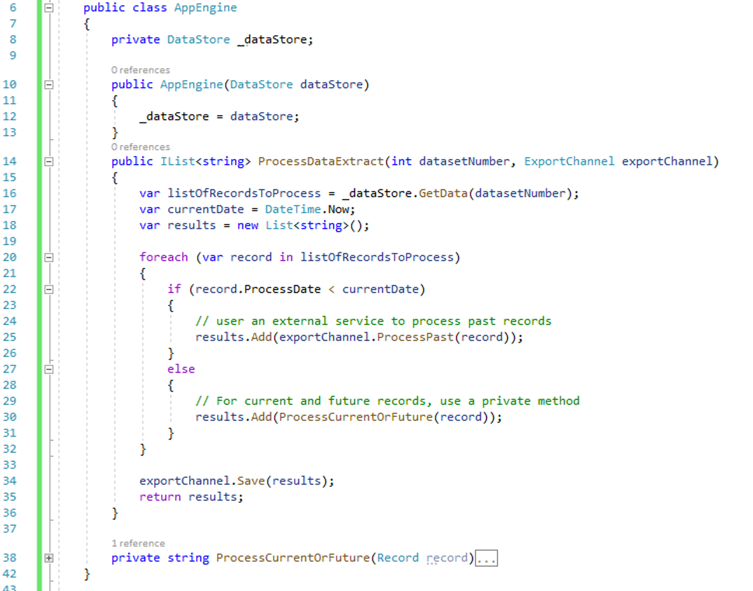

The next thing I notice (frankly, it’s kind of hard to miss), is that I have some static dependencies. On line 8 I have a class-level reference to the DataStore service, and at line 12 (in the method body) I have another one to ExportChannel. These have got to go!

Those of you who are new to testing might be asking why this is a problem. You’ve probably seen dozens of tutorials showing this being done. The problem is that this creates a very brittle piece of software that cannot adapt well to change. If I wanted to add a constructor argument to DataStore, I would have to make a change everywhere this service is instantiated. That could be dozens, if not hundreds, of places. Another issue, which has a direct impact on testability, is that I may eventually have a situation where I want to use different specialized versions of this service in different situations. Creating a direct static dependency on DataStore in this manner makes that almost impossible.

In a testing environment I don’t want to use the actual production implementation of these services. They may make my test slow. Things like I/O operations, web service calls, and database access are notoriously slow. These are crucial for running the application but make testing difficult.

Another concern is that when I write a test, I ONLY want to test the specific code in my method, not the code in the dependencies, which should have their own specific tests. To solve these issues, when testing this code, I want to use mocked versions of these services. Mocks are basically stand-in objects that can return canned values that the code can use to verify its logic. Mocking can be a very involved topic, so I won’t go into a deeper discussion here. If you would like to learn more, please see the links at the end of this post.
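To make "stand-in objects that return canned values" concrete, here is a small Python sketch using the standard library's `unittest.mock` (the post itself targets C#, where a mocking library would play the same role). The method name `get_records` and the function `count_records` are hypothetical; the post does not show the DataStore API.

```python
from unittest.mock import Mock

# A mock standing in for the data store. The method name is an assumption.
data_store = Mock()
data_store.get_records.return_value = ["r1", "r2"]  # canned values


def count_records(store) -> int:
    """Code under test: it only needs something that can hand back records."""
    return len(store.get_records())


count = count_records(data_store)

# The mock can also confirm how it was used, if a test needs that.
data_store.get_records.assert_called_once_with()
```

No database or web service is touched, so the test stays fast and exercises only the logic in `count_records`.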

But, with statically bound dependencies, how do I get the code to use my mock objects instead of the real ones when I want to run a test? The answer is to refactor our code to use Dependency Injection:

In this refactoring, I have added a constructor to the AppEngine class which takes a DataStore as a parameter. The consumer of this class will pass in an instance of DataStore (or an object derived from DataStore), which my class can use. Additionally, I added a parameter of type ExportChannel to the method ProcessDataExtract. This again requires that the calling method provide an object of type ExportChannel. This enables me to pass in mocked objects as part of my test; my test will be the consumer of the AppEngine class and will be calling the ProcessDataExtract method.

As alluded to above, this not only enables me to pass in mocked values for testing, but also allows me to pass in specialized versions of those classes based on whatever my current context is, which enhances code reusability. This is especially evident with the ProcessDataExtract method. I may need to run several types of extracts with this method that would only vary by the type of ExportChannel. Now, I can reuse this method and pass in whatever ExportChannel I want. This is similar to the Strategy Pattern, which you can read more about here.
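The shape of this refactoring can be sketched in Python as follows. This is an illustration under assumptions, not the post's C# code: the `get_records` and `save` method names, the sorting step, and the `InMemoryStore` and `ListChannel` test doubles are all hypothetical.

```python
from typing import List, Protocol


class DataStore(Protocol):
    def get_records(self) -> List[str]: ...


class ExportChannel(Protocol):
    def save(self, rows: List[str]) -> None: ...


class AppEngine:
    def __init__(self, data_store: DataStore) -> None:
        # Constructor injection: the caller decides which store to use.
        self._data_store = data_store

    def process_data_extract(self, export_channel: ExportChannel) -> List[str]:
        # Method injection: each call can target a different channel.
        results = sorted(self._data_store.get_records())  # assumed processing step
        export_channel.save(results)
        return results


class InMemoryStore:
    """Test double returning canned records."""

    def get_records(self) -> List[str]:
        return ["b", "a"]


class ListChannel:
    """Test double that records what it was asked to save."""

    def __init__(self) -> None:
        self.saved: List[str] = []

    def save(self, rows: List[str]) -> None:
        self.saved = list(rows)


channel = ListChannel()
results = AppEngine(InMemoryStore()).process_data_extract(channel)
```

Swapping `ListChannel` for a file-based or network-based channel would not require touching `AppEngine`, which is the reuse benefit described above.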

So, this method is already looking a lot better. You might even think that we’re done, and there’s no way we can improve the testability of this method. But there’s still one problem. It’s subtle.

Do you see it?

Hint: What happens if I run this test now, and then run it again in an hour? Am I guaranteed to get the same results?

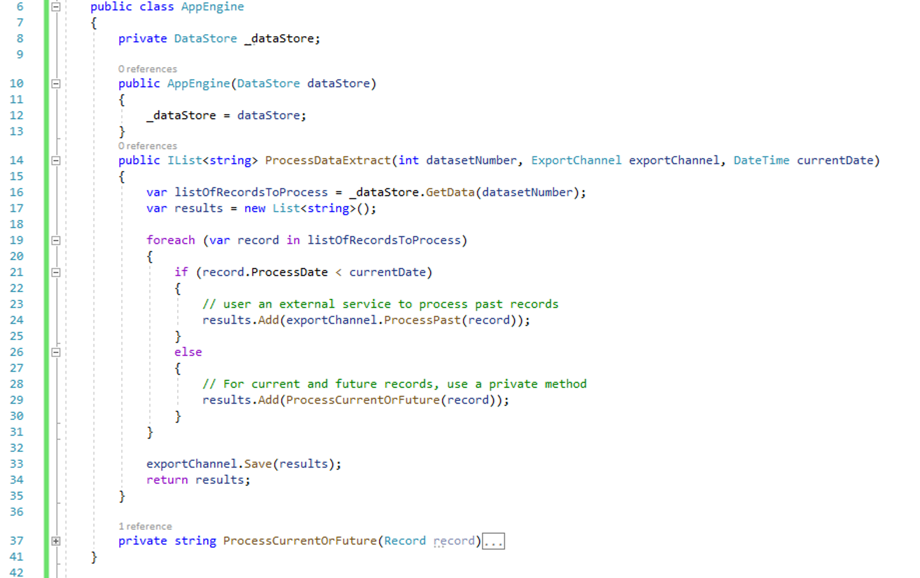

Look at line 17. This line creates a problem for me. The ProcessDataExtract function sorts records based on the current time. If I am using a mock of DataStore to pass in a static, canned set of records, the code is going to compare the current time captured on line 17 to the time in my test records. Time goes on, but my test data stands still, and eventually my results are going to change, which could cause my test to act unpredictably.

When creating testable code, a good practice is to abstract away anything that might change. In this case, the value of DateTime.Now changes constantly. So, we need to take it out of our equation, at least for testing:

To solve this issue, I have removed the call to DateTime.Now on line 17 and made currentDate a parameter for the ProcessDataExtract function. This means that when I run my tests, I can pass in any date I want, which means I will be getting consistent test results. Like the previous refactor, it also enhances the reusability of this method. I’m no longer bound to the current date. I can run it for past dates and future dates without having to create a separate method.
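Here is a minimal Python sketch of why passing the date in makes a test repeatable. The record shape, the one-day age window, and the function name `recent_records` are assumptions for illustration; the post's actual time-based logic is in the C# screenshot and may differ.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Record = Tuple[str, datetime]  # (name, timestamp); a hypothetical record shape


def recent_records(
    records: List[Record],
    current_date: datetime,
    max_age: timedelta = timedelta(days=1),
) -> List[str]:
    """Keep records no older than max_age relative to the supplied current_date."""
    cutoff = current_date - max_age
    return [name for name, stamp in records if cutoff <= stamp <= current_date]


# An arbitrary fixed "now": the result is the same today and an hour from now.
fixed_now = datetime(2020, 1, 2, 12, 0)
records = [
    ("fresh", datetime(2020, 1, 2, 9, 0)),
    ("stale", datetime(2019, 12, 28, 9, 0)),
]
fresh = recent_records(records, fixed_now)
```

Production code would pass `datetime.now()` as `current_date`, while tests pass a fixed value.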

And that’s it! With three easy-ish steps we took a piece of untestable legacy code and made it testable! Now, I know this was a small example. But the concepts don’t care about the size of the code; there may be more work to do for some of these in larger codebases, but you will still be able to use these techniques to handle most refactoring situations.

Shameless plug: If you would like to learn more, please check out my book, which is available on Amazon.

Happy Holidays!

Dennis Stepp is prioritizing tests based on risk.

This episode is not sponsored! Want to be a sponsor? You can contact me or check out my sponsorship gig on Fiverr

Show Notes:

- The four factors of risk-based analysis: domain, risks, impact, likelihood

- I threw out the term systemic risk

- Books:

- Clean Code by Robert C. Martin

- The Phoenix Project by Gene Kim

- A Seat at the Table by Mark Schwartz

- Making Work Visible by Dominica DeGrandis

- Working Effectively with Legacy Code by Michael Feathers

Want to be on the next episode? You can! All you need is the willingness to talk about something technical.

This is a repost that originally appeared on the Couchbase Blog: Performance Testing and Load Testing Couchbase with Pillowfight.

Performance testing and load testing are important processes to help you make sure you are production ready. For testing Couchbase Server clusters, there is an open-source command line utility called "cbc-pillowfight". It’s part of libcouchbase.

Before you begin

You’ll need a Couchbase Server cluster up and running. You can try it out directly on your local machine (download Couchbase for Linux, Windows, and Mac) or in a Docker container.

If you’re just trying out pillowfight, you may want to create a bucket on your cluster just for that purpose. I created a bucket called "pillow".

After you have Couchbase Server installed, you’ll need to download and install libcouchbase:

- Mac: brew install libcouchbase

- Windows: download a zip file (latest at the time of writing is libcouchbase-2.8.1)

For more information, including Linux instructions, check out the libcouchbase release notes.

Pillow fight for Performance Testing

If you used homebrew to install on a Mac, you can type cbc-pillowfight --help straight away for the command line help screen.

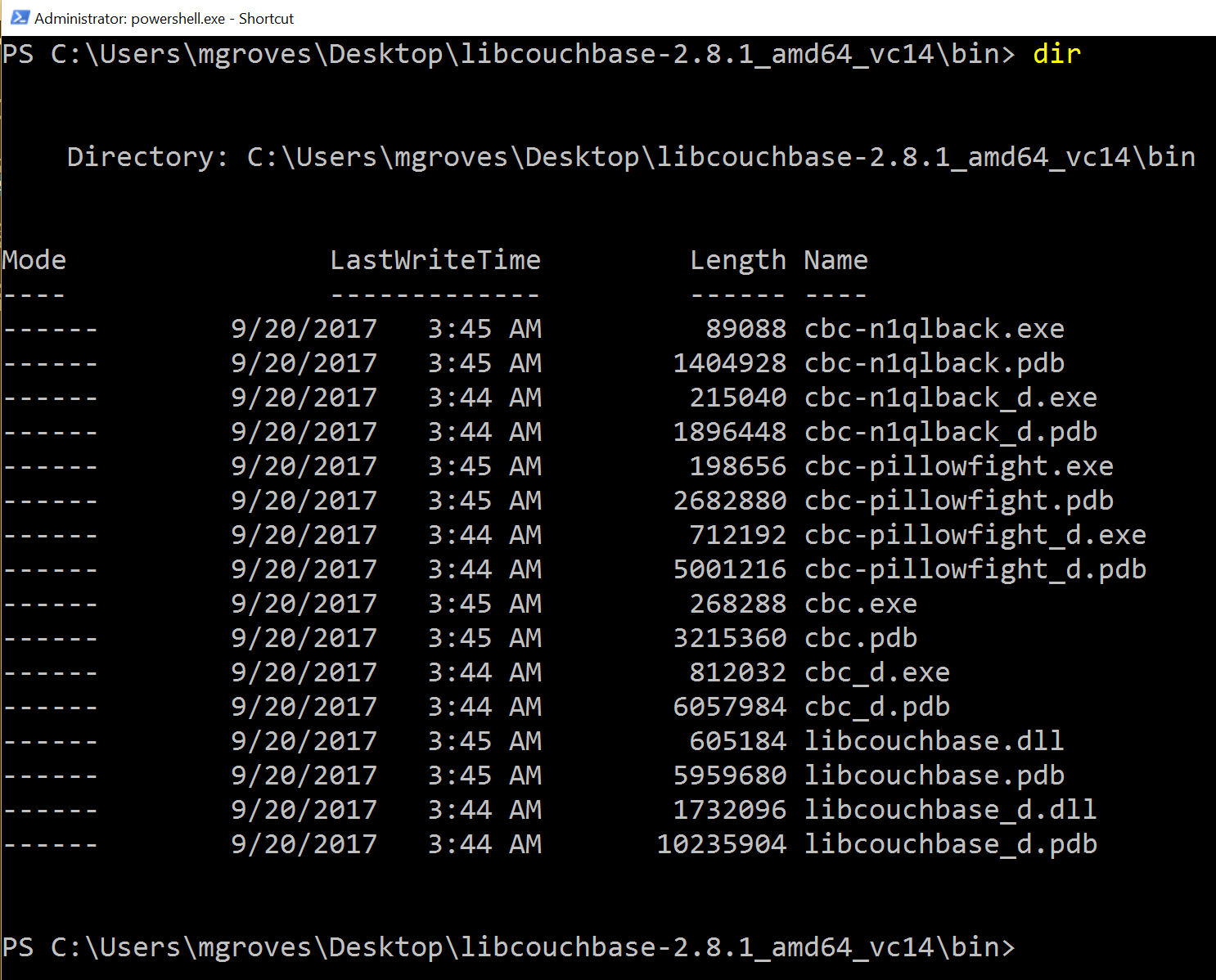

On Windows, unzip the libcouchbase zip file wherever you’d like. You’ll find cbc-pillowfight.exe in the bin folder.

The simplest pillowfight you can run is:

.\cbc-pillowfight.exe -U couchbase://localhost/pillow -u Administrator -P password

This is for a Windows PowerShell command line, but it will be very similar on other OSes.

A pillow fight will start for the cluster running on your local machine (localhost), with the "Administrator" user that has a password of "password" (your username and password may be different).

You should see a message like "Thread 0 has finished populating".

What is a pillow fight?

At this point, the pillowfight is going to start creating, updating, and reading documents from the "pillow" bucket. It’s going to do all these operations ("ops") according to the command line settings you specify (or fall back to the defaults).

For instance, with the -I flag, you can specify how many total documents you want to operate on. The default is 1000. So, if you run the above command, you will soon see 1000 documents show up in the pillow bucket.

It doesn’t just create 1000 documents and quit. Pillowfight will keep "getting" and "updating" those documents until you terminate the process. It’s called a "pillowfight" because it will put your Couchbase Cluster into battle (with actual exertion), but it’s really more of a battle simulation.

While the fight is happening, you can monitor bucket statistics to see how your cluster is performing under load.

As I type this, the fan on my laptop is whirring to life as I stress test the single node Couchbase cluster that I’ve installed on it. (I suspect my home desktop would create a much more impressive set of charts, but I am traveling a lot this month).

There are a lot of statistics available for you to look at on a bucket level. Check out the Couchbase Server documentation on Monitoring Statistics for more details.

Options for performance testing

The default pillowfight settings may not be optimal for the type of application that you’ll be using with Couchbase. There are many ways to adjust your pillow fight to make it better fit your use cases. For the full list of options, type cbc-pillowfight --help at the command line.

But here are some notable options you might want to try out:

- -I or --num-items with a number, to specify how many documents you want to operate on.

- --json to use JSON payloads in the documents. By default, documents are created with non-JSON payloads, but you may want to have real JSON documents in order to test other aspects of performance while the pillow fight is running.

- -e to expire documents after a certain period of time. If you are using Couchbase as a cache or short term storage, you will want to use this setting to monitor the effect of documents expiring.

- --subdoc to use the subdocument API. Not every operation will need to be on an entire document.

- -M or --max-size to set a ceiling on the size of the documents. You may want to adjust this to tailor a more realistic document size for your system. There’s a corresponding -m and --min-size too.

Here’s another example using the above options:

.\cbc-pillowfight.exe -U couchbase://localhost/pillow -u Administrator -P password -I 10000 --json -e 10 --subdoc -M 1024

This will start a pillowfight that uses 10000 JSON documents, expires them after 10 seconds, uses the sub-document API, and caps the document size at 1024 bytes.

Note: there is a -t --num-threads option. Currently, if you're using Windows (like me), you are limited to a single thread (see this code).
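If you want to script repeated runs, the options above can be assembled programmatically. The following Python sketch is only a convenience wrapper under assumptions: the helper names `pillowfight_args` and `run_for` are hypothetical, it assumes `cbc-pillowfight` is on your PATH, and it uses only the flags already described in this post.

```python
import subprocess
import time
from typing import List, Optional


def pillowfight_args(
    bucket_url: str,
    username: str,
    password: str,
    num_items: Optional[int] = None,
    json_docs: bool = False,
    expiry_seconds: Optional[int] = None,
    subdoc: bool = False,
    max_size: Optional[int] = None,
) -> List[str]:
    """Build an argument list from the flags described in this post."""
    args = ["cbc-pillowfight", "-U", bucket_url, "-u", username, "-P", password]
    if num_items is not None:
        args += ["-I", str(num_items)]
    if json_docs:
        args.append("--json")
    if expiry_seconds is not None:
        args += ["-e", str(expiry_seconds)]
    if subdoc:
        args.append("--subdoc")
    if max_size is not None:
        args += ["-M", str(max_size)]
    return args


def run_for(args: List[str], seconds: float) -> None:
    """Start the pillow fight, let it run for a while, then stop it."""
    proc = subprocess.Popen(args)
    try:
        time.sleep(seconds)
    finally:
        proc.terminate()  # pillowfight keeps going until it is terminated
        proc.wait()


args = pillowfight_args(
    "couchbase://localhost/pillow", "Administrator", "password",
    num_items=10000, json_docs=True, expiry_seconds=10, subdoc=True, max_size=1024,
)
```

Calling `run_for(args, 60)` would then run the same fight as the example command for about a minute, which is handy when comparing bucket statistics across settings.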

Summary

Couchbase is committed to performance. We do extensive performance testing to make sure that we are delivering the speed you expect. Check out recent blog posts on our Plasma storage engine and N1QL enhancements. But no one knows your use case and infrastructure better than you. With pillowfight, you have a tool to help you do performance testing, load testing, and stress testing.

Thanks go out to Sergey Avseyev for helping with this blog post, and his contributions to libcouchbase.

Please reach out with questions on Couchbase by leaving a comment below or finding me on Twitter @mgroves.

Eric Elliott is increasing code quality by leveraging pure functions and fast feedback.

Editor's note: sorry for the audio quality; I had to use the Skype audio recording, and it's not as good as I'd like. But it's too good of an episode to just throw out!

Show Notes:

- I mentioned Episode 056: Jeremy Clark on Convincing Your Boss on Unit Testing

- The Outrageous Cost of Skipping TDD and Code Reviews

- Five Questions Every Unit Test Must Answer

- JavaScript Testing: Unit vs Functional vs Integration Tests

- TDD the RITE Way

- How to Build a High Velocity Development Team

- Tools mentioned:

- DevAnywhere - online mentorship

Eric Elliott is on Twitter. Special thanks to JS Cheerleader, who set up this interview!

Theme music is "Crosscutting Concerns" by The Dirty Truckers, check out their music on Amazon or iTunes.

Jeremy Clark is writing unit tests.

Show Notes:

- David Neal was a guest on the podcast, episode 010

- Regression tests - Change Code Without Fear

- Code coverage (NCover is a tool that reports on code coverage for .NET code)

- TDD and BDD and ATDD

- WPF and XML, MVVM

- Book: The Art of Unit Testing, by Roy Osherove

- Jeremy's website, Jeremy Bytes

- Jeremy Clark on Pluralsight

- Jeremy Clark on YouTube
