{

"type": "fulltext-index",

"name": "hotels",

"sourceType": "couchbase",

"sourceName": "travel-sample",

// ... snip ...

}Posts tagged with 'or'

This is a repost that originally appeared on the Couchbase Blog: Authentication and Authorization with RBAC.

In March’s developer build, you can start to see some major changes to authentication and authorization within Role Based Access Control (RBAC) coming to Couchbase Server. These changes are a work in progress: the developer build is essentially a nightly build that gets released to the public. But there’s some good stuff in RBAC that’s worth getting excited about!

Go download the March 5.0.0 developer release of Couchbase Server today. Make sure to click the "Developer" tab to get the developer build (DB), and check it out. You still have time to give us some feedback before the official release.

Keep in mind that I’m writing this blog post on early builds, and some things may change in minor ways by the time you get the release, and some things may still be buggy.

Authentication and Authorization

Just a quick reminder of the difference between authentication and authorization:

-

Authentication is the process of identifying that a user is who they say they are.

-

Authorization is the process of making sure the user has permission to do what they are trying to do.

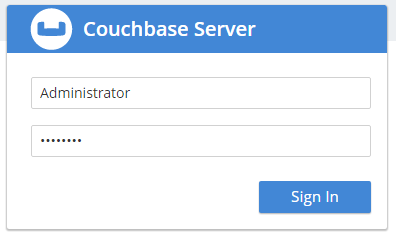

If you’ve used Couchbase before, you’re familiar with the login to what we sometimes call the "Admin Web Console".

However, the Web Console is really not just for admins, it’s for developers too. But until now, you didn’t really have a lot of control built-in to Couchbase about who can log in and (more importantly) what they’re allowed to do.

So, I’d like to introduce you to Couchbase’s new first-class user feature.

Users

There’s still a full administrator user. This is the login that you create when you first install Couchbase. This is the user who is unrestricted, and can do anything, including creating new users. So, for instance, a full administrator can see the "Security" link in the navigation, while other users can’t.

Now, once on this security page, you can add, edit, and delete users.

A user can identify a person, but it can also identify some service or process. For instance, if you’re writing an ASP.NET application, you may want to create a user with a limited set of permissions called "web-service". Therefore, the credentials for that "user" would not be for a person, but for an ASP.NET application.

Next, try adding a new Couchbase user by clicking "+ Add User". I’m going to create a user called "fts_admin", with a name of "Full Text Search Admin", a password, and a single role: FTS Admin of the travel-sample bucket (FTS = Full Text Search).

Adding a new User

Here’s an animation of adding that user:

Some notes about the above animation:

-

I selected "Couchbase" instead of "External". External is meant for LDAP integration. Note that "Couchbase" (internal authentication) will likely become the default in future releases.

-

FTS Admin gives the user permission to do everything with Full Text Searches: create, modify, delete, and execute them.

-

I granted FTS Admin only for the travel-sample bucket. If I selected "all", that would grant permission to all buckets, even ones created in the future.

-

Users with the FTS Searcher role only have access to execute searches, not modify or create them.

More on the difference between FTS Admin and FTS Searcher later.

Logging in as a new user

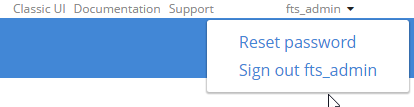

Now that this user is created, I can login as fts_admin. This user’s authentication is handled within Couchbase.

First, in the above animation, note that the fts_admin user has a much more limited set of options compared to the full admin user.

Next, it’s worth pointing out that users can reset their password:

Creating an FTS index

Since I’ve already created an fts_admin user with the FTS Admin role, I’ll create another user called fts_searcher that only has the FTS Searcher role for the travel-sample bucket.

Using the REST API for FTS

I’m going to use the REST API to demonstrate that these users are limited by the roles I’ve given them. If you need a refresher on the REST API, you can refer to the documentation of the Full Text Search API. Also note that I’m using the REST API because there are some bugs in the UI as I’m writing this.

Create an FTS index

To create an index with the REST API, I need to make a PUT request to the /api/index/<indexname> endpoint.

-

First, I’ll create an index for the 'hotel' type in the travel-sample bucket, so I’ll PUT to

/api/index/hotels -

Also, credentials can be put in the URL to use basic authentication

-

Furthermore, the REST endpoints are available on port 8094

Finally, the URL for the PUT request should look something like this:

The body of the PUT is a big JSON object. Below is part of it. You can find the full version on GitHub to try for yourself.

Normally, you can create this via the UI instead of having to create JSON by hand. I’m not going to go into FTS in much detail in this post, because my goal is to demonstrate the new authentication and authorization features, not FTS itself.

Trying to create an index without authorization

Notice that I’m using fts_searcher as the user. I know that fts_searcher shouldn’t have permission to create indexes, so I would expect a 403. And that’s just what I get.

{

"message": "Forbidden. User needs one of the following permissions",

"permissions": [

"cluster.bucket[travel-sample].fts!write"

]

}So, while the authentication worked, that user doesn’t have the necessary authorization.

Creating an index with authorization

I’ll try again with fts_admin:

And assuming an index named 'hotels' doesn’t already exist, you’ll get a 200, and this in the body of response:

{

"status": "ok"

}Using the FTS index

Next, let’s use the REST API to search the index for the word 'breakfast'.

First, make a POST to the /api/index/hotels/query endpoint, again with the proper credentials and port number.

or

Both users should be able to execute a search using that index.

Next, in the body of the POST should be a simple JSON object. Again, you don’t normally have to create this by hand — your SDK of choice or the Web Console UI can do this for you.

{

"explain": true,

"fields": [

"*"

],

"highlight": {},

"query": {

"query": "breakfast"

}

}Finally, the result of this search request will be a large JSON response. Look within the "hits" sub-document for "fragments" to verify that the search worked. Here’s a snippet of my search for "breakfast". Again, the full result is on Github.

// ... snip ...

"reviews.content": [

"… to watch TV. <mark>Breakfast</mark> was served every morning along with a copy of the Times-Picayune. I took my <mark>breakfast</mark> downstairs in the patio, the coffee was very good. The continental <mark>breakfast</mark> is nothing to…"

]

},

// ... snip ...This is a preview, expect some bugs!

There are some bugs and some incomplete features.

-

I’ve shown FTS roles here on purpose. This is because the other roles are not yet fully formed. Please try them out, let us know what you think, but remember they are not in their final form. FTS is closest to ready.

-

I’ve seen some issues when logging in as a non-admin user causes the web console to behave badly. Because of this, I showed the REST example above instead of relying on the UI.

-

Finally, there might be other bugs that we don’t know about yet. Please let us know! You can file an issue in our JIRA system at issues.couchbase.com or submit a question on the Couchbase Forums. Or, contact me with a description of the issue. I would be happy to help you or submit the bug for you (my Couchbase handlers send me a cake pop when I submit a good bug).

If you have questions, the best way to contact me is either Twitter @mgroves or email me matthew.groves@couchbase.com.

Jeremy Miller is the creator of Storyteller.

This episode was recorded at CodeMash 2017 in a massive dining room, so the audio is a bit different than normal.

Show Notes:

- Check out Storyteller

- Book: Specification by Example by Gojko Adzic

Want to be on the next episode? You can! All you need is the willingness to talk about something technical.

Theme music is "Crosscutting Concerns" by The Dirty Truckers, check out their music on Amazon or iTunes.

This is a repost that originally appeared on the Couchbase Blog: New Profiling and Monitoring in Couchbase Server 5.0 Preview.

N1QL query monitoring and profiling updates are just some of goodness you can find in February’s developer preview release of Couchbase Server 5.0.0.

Go download the February 5.0.0 developer release of Couchbase Server today, click the "Developer" tab, and check it out. You still have time to give us some feedback before the official release.

As always, keep in mind that I’m writing this blog post on early builds, and some things may change in minor ways by the time you get the release.

What is profiling and monitoring for?

When I’m writing N1QL queries, I need to be able to understand how well (or how badly) my query (and my cluster) is performing in order to make improvements and diagnose issues.

With this latest developer version of Couchbase Server 5.0, some new tools have been added to your N1QL-writing toolbox.

N1QL Writing Review

First, some review.

There are multiple ways for a developer to execute N1QL queries.

-

Use the SDK of your choice.

-

Use the cbq command line tool.

-

Use the Query Workbench in Couchbase Web Console

-

Use the REST API N1QL endpoints

In this post, I’ll be mainly using Query Workbench.

There are two system catalogs that are already available to you in Couchbase Server 4.5 that I’ll be talking about today.

-

system:active_request - This catalog lists all the currently executing active requests or queries. You can execute the N1QL query

SELECT * FROM system:active_requests;and it will list all those results. -

system:completed_requests - This catalog lists all the recent completed requests (that have run longer than some threshold of time, default of 1 second). You can execute

SELECT * FROM system:completed_requests;and it will list these queries.

New to N1QL: META().plan

Both active_requests and completed_requests return not only the original N1QL query text, but also related information: request time, request id, execution time, scan consistency, and so on. This can be useful information. Here’s an example that looks at a simple query (select * from `travel-sample`) while it’s running by executing select * from system:active_requests;

{

"active_requests": {

"clientContextID": "805f519d-0ffb-4adf-bd19-15238c95900a",

"elapsedTime": "645.4333ms",

"executionTime": "645.4333ms",

"node": "10.0.75.1",

"phaseCounts": {

"fetch": 6672,

"primaryScan": 7171

},

"phaseOperators": {

"fetch": 1,

"primaryScan": 1

},

"phaseTimes": {

"authorize": "500.3µs",

"fetch": "365.7758ms",

"parse": "500µs",

"primaryScan": "107.3891ms"

},

"requestId": "80787238-f4cb-4d2d-999f-7faff9b081e4",

"requestTime": "2017-02-10 09:06:18.3526802 -0500 EST",

"scanConsistency": "unbounded",

"state": "running",

"statement": "select * from `travel-sample`;"

}

}First, I want to point out that phaseTimes is a new addition to the results. It’s a quick and dirty way to get a sense of the query cost without looking at the whole profile. It gives you the overall cost of each request phase without going into detail of each operator. In the above example, for instance, you can see that parse took 500µs and primaryScan took 107.3891ms. This might be enough information for you to go on without diving into META().plan.

However, with the new META().plan, you can get very detailed information about the query plan. This time, I’ll execute SELECT *, META().plan FROM system:active_requests;

[

{

"active_requests": {

"clientContextID": "75f0f401-6e87-48ae-bca8-d7f39a6d029f",

"elapsedTime": "1.4232754s",

"executionTime": "1.4232754s",

"node": "10.0.75.1",

"phaseCounts": {

"fetch": 12816,

"primaryScan": 13231

},

"phaseOperators": {

"fetch": 1,

"primaryScan": 1

},

"phaseTimes": {

"authorize": "998.7µs",

"fetch": "620.704ms",

"primaryScan": "48.0042ms"

},

"requestId": "42f50724-6893-479a-bac0-98ebb1595380",

"requestTime": "2017-02-15 14:44:23.8560282 -0500 EST",

"scanConsistency": "unbounded",

"state": "running",

"statement": "select * from `travel-sample`;"

},

"plan": {

"#operator": "Sequence",

"#stats": {

"#phaseSwitches": 1,

"kernTime": "1.4232754s",

"state": "kernel"

},

"~children": [

{

"#operator": "Authorize",

"#stats": {

"#phaseSwitches": 3,

"kernTime": "1.4222767s",

"servTime": "998.7µs",

"state": "kernel"

},

"privileges": {

"default:travel-sample": 1

},

"~child": {

"#operator": "Sequence",

"#stats": {

"#phaseSwitches": 1,

"kernTime": "1.4222767s",

"state": "kernel"

},

"~children": [

{

"#operator": "PrimaryScan",

"#stats": {

"#itemsOut": 13329,

"#phaseSwitches": 53319,

"execTime": "26.0024ms",

"kernTime": "1.3742725s",

"servTime": "22.0018ms",

"state": "kernel"

},

"index": "def_primary",

"keyspace": "travel-sample",

"namespace": "default",

"using": "gsi"

},

{

"#operator": "Fetch",

"#stats": {

"#itemsIn": 12817,

"#itemsOut": 12304,

"#phaseSwitches": 50293,

"execTime": "18.5117ms",

"kernTime": "787.9722ms",

"servTime": "615.7928ms",

"state": "services"

},

"keyspace": "travel-sample",

"namespace": "default"

},

{

"#operator": "Sequence",

"#stats": {

"#phaseSwitches": 1,

"kernTime": "1.4222767s",

"state": "kernel"

},

"~children": [

{

"#operator": "InitialProject",

"#stats": {

"#itemsIn": 11849,

"#itemsOut": 11848,

"#phaseSwitches": 47395,

"execTime": "5.4964ms",

"kernTime": "1.4167803s",

"state": "kernel"

},

"result_terms": [

{

"expr": "self",

"star": true

}

]

},

{

"#operator": "FinalProject",

"#stats": {

"#itemsIn": 11336,

"#itemsOut": 11335,

"#phaseSwitches": 45343,

"execTime": "6.5002ms",

"kernTime": "1.4157765s",

"state": "kernel"

}

}

]

}

]

}

},

{

"#operator": "Stream",

"#stats": {

"#itemsIn": 10824,

"#itemsOut": 10823,

"#phaseSwitches": 21649,

"kernTime": "1.4232754s",

"state": "kernel"

}

}

]

}

}, ...

]The above output comes from the Query Workbench.

Note the new "plan" part. It contains a tree of operators that combine to execute the N1QL query. The root operator is a Sequence, which itself has a collection of child operators like Authorize, PrimaryScan, Fetch, and possibly even more Sequences.

Enabling the profile feature

To get this information when using cbq or the REST API, you’ll need to turn on the "profile" feature.

You can do this in cbq by entering set -profile timings; and then running your query.

You can also do this with the REST API on a per request basis (using the /query/service endpoint and passing a querystring parameter of profile=timings, for instance).

You can turn on the setting for the entire node by making a POST request to http://localhost:8093/admin/settings, using Basic authentication, and a JSON body like:

{

"completed-limit": 4000,

"completed-threshold": 1000,

"controls": false,

"cpuprofile": "",

"debug": false,

"keep-alive-length": 16384,

"loglevel": "INFO",

"max-parallelism": 1,

"memprofile": "",

"pipeline-batch": 16,

"pipeline-cap": 512,

"pretty": true,

"profile": "timings",

"request-size-cap": 67108864,

"scan-cap": 0,

"servicers": 32,

"timeout": 0

}Notice the profile setting. It was previously set to off, but I set it to "timings".

You may not want to do that, especially on nodes being used by other people and programs, because it will affect other queries running on the node. It’s better to do this on a per-request basis.

It’s also what Query Workbench does by default.

Using the Query Workbench

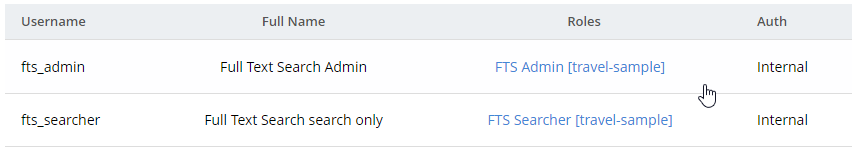

There’s a lot of information in META().plan about how the plan is executed. Personally, I prefer to look at a simplified graphical version of it in Query Workbench by clicking the "Plan" icon (which I briefly mentioned in a previous post about the new Couchbase Web Console UI).

Let’s look at a slightly more complex example. For this exercise, I’m using the travel-sample bucket, but I have removed one of the indexes (DROP INDEX `travel-sample.def_sourceairport;`).

I then execute a N1QL query to find flights between San Francisco and Miami:

SELECT r.id, a.name, s.flight, s.utc, r.sourceairport, r.destinationairport, r.equipment

FROM `travel-sample` r

UNNEST r.schedule s

JOIN `travel-sample` a ON KEYS r.airlineid

WHERE r.sourceairport = 'SFO'

AND r.destinationairport = 'MIA'

AND s.day = 0

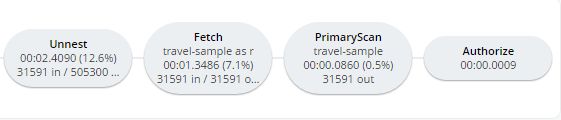

ORDER BY a.name;Executing this query (on my single-node local machine) takes about 10 seconds. That’s definitely not an acceptible amount of time, so let’s look at the plan to see what the problem might be (I broke it into two lines so the screenshots will fit in the blog post).

Looking at that plan, it seems like the costliest parts of the query are the Filter and the Join. JOIN operations work on keys, so they should normally be very quick. But it looks like there are a lot of documents being joined.

The Filter (the WHERE part of the query) is also taking a lot of time. It’s looking at the sourceairport and destinationairport fields. Looking elsewhere in the plan, I see that there is a PrimaryScan. This should be a red flag when you are trying to write performant queries. PrimaryScan means that the query couldn’t find an index other than the primary index. This is roughly the equivalent of a "table scan" in relational database terms. (You may want to drop the primary index so that these issues get bubbled-up faster, but that’s a topic for another time).

Let’s add an index on the sourceairport field and see if that helps.

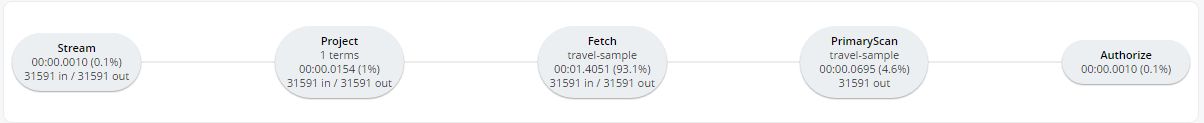

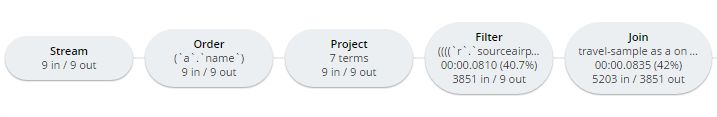

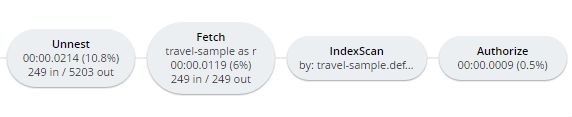

CREATE INDEX `def_sourceairport` ON `travel-sample`(`sourceairport`);Now, running the same query as above, I get the following plan:

This query took ~100ms (on my single-node local machine) which is much more acceptible. The Filter and the Join still take up a large percentage of the time, but thanks to the IndexScan replacing the PrimaryScan, there are many fewer documents that those operators have to deal with. Perhaps the query could be improved even more with an additional index on the destinationairport field.

Beyond Tweaking Queries

The answer to performance problems is not always in tweaking queries. Sometimes you might need to add more nodes to your cluster to address the underlying problem.

Look at the PrimaryScan information in META().plan. Here’s a snippet:

"~children": [

{

"#operator": "PrimaryScan",

"#stats": {

"#itemsOut": 13329,

"#phaseSwitches": 53319,

"execTime": "26.0024ms",

"kernTime": "1.3742725s",

"servTime": "22.0018ms",

"state": "kernel"

},

"index": "def_primary",

"keyspace": "travel-sample",

"namespace": "default",

"using": "gsi"

}, ... ]The servTime value indicates how much time is spent by the Query service to wait on the Key/Value data storage. If the servTime is very high, but there is a small number of documents being processed, that indicates that the indexer (or the key/value service) can’t keep up. Perhaps they have too much load coming from somewhere else. So this means that something weird is running someplace else or that your cluster is trying to handle too much load. Might be time to add some more nodes.

Similarly, the kernTime is how much time is spent waiting on other N1QL routines. This might mean that something else downstream in the query plan has a problem, or that the query node is overrun with requests and are having to wait a lot.

We want your feedback!

The new META().plan functionality and the new Plan UI combine in Couchbase Server 5.0 to improve the N1QL writing and profiling process.

Stay tuned to the Couchbase Blog for information about what’s coming in the next developer build.

Interested in trying out some of these new features? Download Couchbase Server 5.0 today!

We want feedback! Developer releases are coming every month, so you have a chance to make a difference in what we are building.

Bugs: If you find a bug (something that is broken or doesn’t work how you’d expect), please file an issue in our JIRA system at issues.couchbase.com or submit a question on the Couchbase Forums. Or, contact me with a description of the issue. I would be happy to help you or submit the bug for you (my Couchbase handlers high-five me every time I submit a good bug).

Feedback: Let me know what you think. Something you don’t like? Something you really like? Something missing? Now you can give feedback directly from within the Couchbase Web Console. Look for the ![]() icon at the bottom right of the screen.

icon at the bottom right of the screen.

In some cases, it may be tricky to decide if your feedback is a bug or a suggestion. Use your best judgement, or again, feel free to contact me for help. I want to hear from you. The best way to contact me is either Twitter @mgroves or email me matthew.groves@couchbase.com.

- Data modeling

- The data itself (this blog post)

- Applications using the data

Data Types in JSON vs SQL

| SQL Server | JSON |

|---|---|

|

nvarchar, varchar, text |

string |

|

int, float, decimal, double |

number |

|

bit |

boolean |

|

null |

null |

|

XML/hierarchyid fields |

array / object |

Migrating and translating data

- Give yourself plenty of time in planning. While migrating, you may discover that you need to rethink your model. You will need to test and make adjustments, and it’s better to have extra time than make mistakes while hurrying. Migrating data is an iterative cycle: migrate a table, see if that works, adjust, and keep iterating. You may have to go through this cycle many times.

- Test your migration using real data. Data can be full of surprises. You may think that NVARCHAR field only ever contains string representations of numbers, but maybe there are some abnormal rows that contain words. Use a copy of the real data to test and verify your migration.

- Be prepared to run the migration multiple times. Have a plan to cleanup a failed migration and start over. This might be a simple

DELETE FROM bucketin N1QL, or it could be a more nuanaced and targeted series of cleanups. If you plan from the start, this will be easier. Automate your migration, so this is less painful. - ETL or ELT? Extract-Transform-Load, or Extract-Load-Transform. When are you going to do a transform? When putting data into Couchbase, the flexibility of JSON allows you to transfer-in-place after loading if you choose.

An example ETL migration

ShoppingCartMigrator class and a SocialMediaMigrator class. I’m only going to cover the shopping cart in this post. I pass it a Couchbase bucket and the Entity Framework context that I used in the last blog post. (You could instead pass an NHibernate session or a plain DbConnection here, depending on your preference).public class ShoppingCartMigrator

{

readonly IBucket _bucket;

readonly SqlToCbContext _context;

public ShoppingCartMigrator(IBucket bucket, SqlToCbContext context)

{

_bucket = bucket;

_context = context;

}

}Go method to perform the migration, and a Cleanup method to delete any documents created in the migration, should I choose to.Go method, I let Entity Framework do the hard work of the joins, and loop through every shopping cart.public bool Go()

{

var carts = _context.ShoppingCarts

.Include(x => x.Items)

.ToList();

foreach (var cart in carts)

{

var cartDocument = new Document<dynamic>

{

Id = cart.Id.ToString(),

Content = MapCart(cart)

};

var result = _bucket.Insert(cartDocument);

if (!result.Success)

{

Console.WriteLine($"There was an error migrating Shopping Cart {cart.Id}");

return false;

}

Console.WriteLine($"Successfully migrated Shopping Cart {cart.Id}");

}

return true;

}public void Cleanup()

{

Console.WriteLine("Delete all shopping carts...");

var result = _bucket.Query<dynamic>("DELETE FROM `sqltocb` WHERE type='ShoppingCart';");

if (!result.Success)

{

Console.WriteLine($"{result.Exception?.Message}");

Console.WriteLine($"{result.Message}");

}

}DELETE FROM sqltocb WHERE fingerprint = '999cfbc3-186e-4219-ab5d-18ad130a9dc6'). Or vice versa: fingerprint the problematic data for later analysis and delete the rest. Just make sure to cleanup these temporary fields when the migration is completed successfully.What about the other features of SQL Server?

- SQL/N1QL

- Stored Procedures

- Service tiers

- Triggers

- Views

- Serialization

- Security

- Concurrency

- Autonumber

- OR/Ms and ODMs

- Transactions

Summary

This is a repost that originally appeared on the Couchbase Blog: Moving from SQL Server to Couchbase Part 1: Data Modeling.

In this series of blog posts, I’m going to lay out the considerations when moving to a document database when you have a relational background. Specifically, Microsoft SQL Server as compared to Couchbase Server.

In three parts, I’m going to cover:

-

Data modeling (this blog post)

-

The data itself

-

Applications using the data

The goal is to lay down some general guidelines that you can apply to your application planning and design.

If you would like to follow along, I’ve created an application that demonstrates Couchbase and SQL Server side-by-side. Get the source code from GitHub, and make sure to download a developer preview of Couchbase Server.

Why would I do this?

Before we get started, I want to spend a little bit of time on motivation. There are 3 main reasons why one might consider using a document data store instead of (or in addition to) a relational database. Your motivation may be one or all three:

-

Speed: Couchbase Server uses a memory-first architecture which can provide a great speed boost as compared to a relational databases

-

Scalability: Couchbase Server is a distributed database, which allows you to scale out (and scale back in) capacity by just racking up commodity hardware. Built-in Couchbase features like auto-sharding, replication, load balancing make scaling a lot smoother and easier than relational databases.

-

Flexibility: Some data fits nicely in a relational model, but some data can benefit from the flexibility of using JSON. Unlike SQL Server, schema maintenance is no longer an issue. With JSON: the schema bends as you need it to.

For these reasons and others, Gannett switched from SQL Server to Couchbase Server. If you are considering this, definitely check out Gannett’s full presentation.

It should be noted that document databases and relational databases can be complimentary. Your application may be best served by one, the other, or a combination of both. In many cases, it simply is not possible to completely remove relational databases from your design, but a document database like Couchbase Server can still bring the above benefits to your software. The rest of this blog series will assume you have a SQL Server background and are either replacing, supplimenting, or starting a new greenfield project using Couchbase.

The ease or difficulty of transitioning an existing application varies widely based on a number of factors. In some cases it may be extremely easy; in some cases it will be time-consuming and difficult; in some (shrinking number of) cases it may not even be a good idea.

Understanding the differences

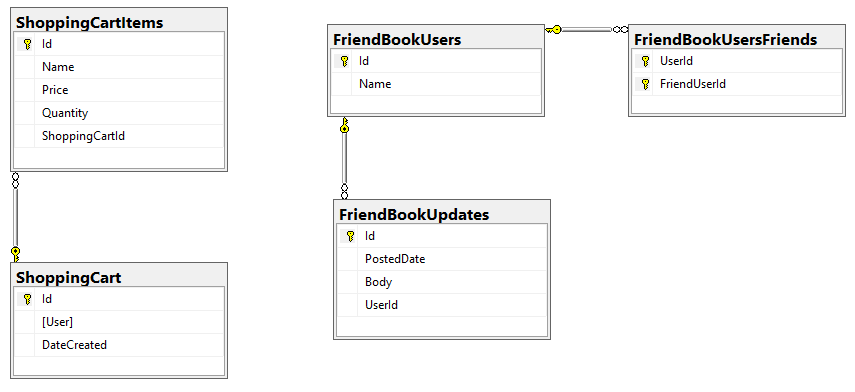

The first step is to understand how data is modeled in a document database. In a relational database, data is typically stored flat in a table and it is given structure with primary and foreign keys. As a simple example, let’s consider a relational database for a web site that has a shopping cart as well as social media features. (In this example, those features are unrelated to keep things simple).

In a document database, data is stored as keys and values. A Couchbase bucket contains documents; each document has a unique key and a JSON value. There are no foreign keys (or, more accurately, there are no foreign key constraints).

Here’s a high-level comparison of SQL Server features/naming as compared to Couchbase:

| SQL Server | Couchbase Server |

|---|---|

|

Server |

Cluster |

|

Database |

Bucket |

|

Row(s) from table(s) |

Document |

|

Column |

JSON key/value |

|

Primary Key |

Document Key |

These comparisons are a metaphorical starting point. Looking at that table, it might be tempting to take a simplistic approach. "I have 5 tables, therefore I’ll just create 5 different types of documents, with one document per row." This is the equivalent of literally translating a written language. The approach may work sometimes, but it doesn’t take into account the full power of a document database that uses JSON. Just as a literal translation of a written language doesn’t take into account cultural context, idioms, and historical context.

Because of the flexibility of JSON, the data in a document database can be structured more like a domain object in your application. Therefore you don’t have an impedence mismatch that is often addressed by OR/M tools like Entity Framework and NHibernate.

There are two main approaches you can use when modeling data in Couchbase that we will examine further:

-

Denormalization - Instead of splitting data between tables using foreign keys, group concepts together into a single document.

-

Referential - Concepts are given their own documents, but reference other documents using the document key.

Denormalization example

Let’s consider the "shopping cart" entity.

To represent this in a relational database would likely require two tables: a ShoppingCart table and a ShoppingCartItem table with a foreign key to a row in ShoppingCart.

When creating the model for a document database, the decision has to be made whether to continue modeling this as two separate entities (e.g. a Shopping Cart document and corresponding Shopping Cart Item documents) or whether to "denormalize" and combine a row from ShoppingCart and row(s) from ShoppingCartItem into a single document to represent a shopping cart.

In Couchbase, using a denormalization strategy, a shopping cart and the items in it would be represented by a single document.

{

"user": "mgroves",

"dateCreated": "2017-02-02T15:28:11.0208157-05:00",

"items": [

{

"name": "BB-8 Sphero",

"price": 80.18,

"quantity": 1

},

{

"name": "Shopkins Season 5",

"price": 59.99,

"quantity": 2

}

],

"type": "ShoppingCart"

}Notice that the relationship between the items and the shopping cart is now implicit to being contained in the same document. No more need for an ID on the items to represent a relationship.

In C#, you would likely define ShoppingCart and Item classes to model this data:

public class ShoppingCart

{

public Guid Id { get; set; }

public string User { get; set; }

public DateTime DateCreated { get; set; }

public List<Item> Items { get; set; }

}

public class Item

{

public Guid Id { get; set; } // necessary for SQL Server, not for Couchbase

public string Name { get; set; }

public decimal Price { get; set; }

public int Quantity { get; set; }

}These classes would still make sense with Couchbase, so you can reuse them or design them this way. But with a relational database, this design does not match up in a direct way.

Hence the need for OR/Ms like NHibernate or Entity Framework. The way the above model can be mapped to a relational database is represented in Entity Framework* like this:

public class ShoppingCartMap : EntityTypeConfiguration<ShoppingCart>

{

public ShoppingCartMap()

{

this.HasKey(m => m.Id);

this.ToTable("ShoppingCart");

this.Property(m => m.User);

this.Property(m => m.DateCreated);

this.HasMany(m => m.Items)

.WithOptional()

.HasForeignKey(m => m.ShoppingCartId);

}

}

public class ShoppingCartItemMap : EntityTypeConfiguration<Item>

{

public ShoppingCartItemMap()

{

this.HasKey(m => m.Id);

this.ToTable("ShoppingCartItems");

this.Property(m => m.Name);

this.Property(m => m.Price);

this.Property(m => m.Quantity);

}

}*Other OR/Ms will have similar mappings

Based on these mappings and an analysis of the use cases, I could decide that it would be modeled as a single document in Couchbase. ShoppingCartItemMap only exists so that the OR/M knows how to populate the Items property in ShoppingCart. Also, it’s unlikely that the application will be doing reads of the shopping cart without also needing to read the items.

In a later post, OR/Ms will be discussed further, but for now I can say that the ShoppingCartMap and ShoppingCartItemMap classes are not necessary when using Couchbase, and the Id field from Item isn’t necessary. In fact, the Couchbase .NET SDK can directly populate a ShoppingCart object without an OR/M in a single line of code:

public ShoppingCart GetCartById(Guid id)

{

return _bucket.Get<ShoppingCart>(id.ToString()).Value;

}This isn’t to say that using Couchbase will always result in shorter, easier to read code. But for certain use cases, it can definitely have an impact.

Referential example

It’s not always possible or optimal to denormalize relationships like the ShoppingCart example. In many cases, a document will need to reference another document. Depending on how your application expects to do reads and writes, you may want to keep your model in separate documents by using referencing.

Let’s look at an example where referencing might be the best approach. Suppose your application has some social media elements. Users can have friends, and users can post text updates.

One way to model this:

-

Users as individual documents

-

Updates as individual documents that reference a user

-

Friends as an array of keys within a user document

With two users, two updates, we would have 4 documents in Couchbase that look like this:

[

// Key: "7fc5503f-2092-4bac-8c33-65ef5b388f4b"

{

"friends": [

"c5f05561-9fbf-4ab0-b68f-e392267c0703"

],

"name": "Matt Groves",

"type": "User"

},

// Key: "c5f05561-9fbf-4ab0-b68f-e392267c0703"

{

"friends": [ ],

"name": "Nic Raboy",

"type": "User"

},

// Key: "5262cf62-eb10-4fdd-87ca-716321405663"

{

"body": "Nostrum eligendi aspernatur enim repellat culpa.",

"postedDate": "2017-02-02T16:19:45.2792288-05:00",

"type": "Update",

"user": "7fc5503f-2092-4bac-8c33-65ef5b388f4b"

},

// Key: "8d710b83-a830-4267-991e-4654671eb14f"

{

"body": "Autem occaecati quam vel. In aspernatur dolorum.",

"postedDate": "2017-02-02T16:19:48.7812386-05:00",

"type": "Update",

"user": "c5f05561-9fbf-4ab0-b68f-e392267c0703"

}

]I decided to model 'friends' as a one-way relationship (like Twitter) for this example, which is why Matt Groves has Nic Raboy as a friend but not vice-versa. (Don’t read too much into this, Nic :).

The way to model this in C# could be:

public class FriendbookUser

{

public Guid Id { get; set; }

public string Name { get; set; }

public virtual List<FriendbookUser> Friends { get; set; }

}

public class Update

{

public Guid Id { get; set; }

public DateTime PostedDate { get; set; }

public string Body { get; set; }

public virtual FriendbookUser User { get; set; }

public Guid UserId { get; set; }

}The Update to FriendbookUser relationship can be modeled as either a Guid or as another FriendbookUser object. This is an implementation detail. You might prefer one, the other, or both, depending on your application needs and/or how your OR/M works. In either case, the underlying model is the same.

Here’s the mapping I used for these classes in Entity Framework. Your mileage may vary, depending on how you use EF or other OR/M tools. Focus on the underlying model and not the details of the OR/M mapping tool.

public class UpdateMap : EntityTypeConfiguration<Update>

{

public UpdateMap()

{

this.HasKey(m => m.Id);

this.ToTable("FriendBookUpdates");

this.Property(m => m.Body);

this.Property(m => m.PostedDate);

this.HasRequired(m => m.User)

.WithMany()

.HasForeignKey(m => m.UserId);

}

}

public class FriendbookUserMap : EntityTypeConfiguration<FriendbookUser>

{

public FriendbookUserMap()

{

this.HasKey(m => m.Id);

this.ToTable("FriendBookUsers");

this.Property(m => m.Name);

this.HasMany(t => t.Friends)

.WithMany()

.Map(m =>

{

m.MapLeftKey("UserId");

m.MapRightKey("FriendUserId");

m.ToTable("FriendBookUsersFriends");

});

}

}If, instead of storing these entities as separate documents, we applied the same denormalization as the shopping cart example and attempted to store a user and updates in one document, we would end up with some problems.

-

Duplication of friends: each user would store the details for their friends. This is not tenable, because now a user’s information would be stored in multiple places instead of having a single source of truth (unlike the shopping cart, where having the same item in more than one shopping cart probably doesn’t make any domain sense). This might be okay when using Couchbase as a cache, but not as a primary data store.

-

Size of updates: Over a period of regular use, an individual user could post hundreds or thousands of updates. This could lead to a very large document which could slow down I/O operations. This can be mitigated with Couchbase’s sub-document API, but also note that Couchbase has a ceiling of 20mb per document.

Note: There’s an N+1 problem here too (friends of friends, etc), but I’m not going to spend time on addressing that. It’s a problem that’s not unique to either database.

Additionally, it may not be the case that when the application reads or writes a user that it will need to read or write friends & updates. And, when writing an update, it’s not likely that the application will need to update a user. Since these entities may often be read/written on their own, that indicates that they need to be modeled as separate documents.

Note the array in the Friends field in the user document and the value in the User field in the update document. These values can be used to retrieve the associated documents. Later in this post, I’ll discuss how to do it with key/value operations and how to do it with N1QL.

To sum up, there are two ways to model data in a document database. The shopping cart example used nested objects, while the social media example used separate documents. In those examples, it was relatively straightforward to choose. When you’re making your own modeling decisions, here’s a handy cheat sheet:

| If … | Then consider… |

|---|---|

|

Relationship is 1-to-1 or 1-to-many |

Nested objects |

|

Relationship is many-to-1 or many-to-many |

Separate documents |

|

Data reads are mostly parent fields |

Separate document |

|

Data reads are mostly parent + child fields |

Nested objects |

|

Data reads are mostly parent or child (not both) |

Separate documents |

|

Data writes are mostly parent and child (both) |

Nested objects |

Key/value operations

To get document(s) in Couchbase, the simplest and fastest way is to ask for them by key. Once you have one of the FriendbookUser documents above, you can then execute another operation to get the associated documents. For instance, I could ask Couchbase to give me the documents for keys 2, 3, and 1031 (as a batch operation). This would give me the documents for each friend. I can then repeat that for Updates, and so on.

The benefit to this is speed: key/value operations are very fast in Couchbase, and you will likely be getting values directly from RAM.

The drawback is that it involves at least two operations (get FriendbookUser document, then get the Updates). So this may involve some extra coding. It may also require you to think more carefully about how you construct document keys (more on that later).

N1QL

In Couchbase, you have the ability to write queries using N1QL, which is SQL for JSON. This includes the JOIN keyword. This allows me to, for instance, write a query to get the 10 latest updates and the users that correspond to them.

public List<Update> GetTenLatestUpdates()

{

var n1ql = @"SELECT up.body, up.postedDate, { 'id': META(u).id, u.name} AS `user`

FROM `sqltocb` up

JOIN `sqltocb` u ON KEYS up.`user`

WHERE up.type = 'Update'

ORDER BY STR_TO_MILLIS(up.postedDate) DESC

LIMIT 10;";

var query = QueryRequest.Create(n1ql);

query.ScanConsistency(ScanConsistency.RequestPlus);

var result = _bucket.Query<Update>(query);

return result.Rows;

}The result of this query would be:

[

{

"body": "Autem occaecati quam vel. In aspernatur dolorum.",

"postedDate": "2017-02-02T16:19:48.7812386-05:00",

"user": {

"id": "c5f05561-9fbf-4ab0-b68f-e392267c0703",

"name": "Bob Johnson"

}

},

{

"body": "Nostrum eligendi aspernatur enim repellat culpa eligendi maiores et.",

"postedDate": "2017-02-02T16:19:45.2792288-05:00",

"user": {

"id": "7fc5503f-2092-4bac-8c33-65ef5b388f4b",

"name": "Steve Oberbrunner"

}

},

// ... etc ...

]N1QL allows you to have great flexibility in retrieving data. I don’t have to be restricted by just using keys. It’s also easy to pick up, since it’s a superset of SQL that SQL Server users will be comfortable with quickly. However, the tradeoff here is that indexing is important. Even more so than SQL Server indexing. If you were to write a query on the Name field, for instance, you should have an index like:

CREATE INDEX IX_Name ON `SocialMedia` (Name) USING GSI;Otherwise the query will fail to execute (if you have no indexing) or it will not be performant (if you only have a primary index created).

There are pros and cons in deciding to use referencing or not. The values in friends and user are similar to foreign keys, in that they reference another document. But there is no enforcement of values by Couchbase. The management of these keys must be handled properly by the application. Further, while Couchbase provides ACID transactions for single document operations, there is no multi-document ACID transaction available.

There are ways to deal with these caveats in your application layer that will be discussed further in later blog posts in this series, so stay tuned!

Key design and document differentiation

In relational databases, rows of data (typically, not always) correspond to a primary key, which is often an integer or a Guid, and sometimes a composite key. These keys don’t necessarily have any meaning: they are just used to identify a row within a table. For instance, two rows of data in two different tables may have the same key (an integer value of 123, for instance), but that doesn’t necessarily mean the data is related. This is because the schema enforced by relational databases often conveys meaning on its own (e.g. a table name).

In document databases like Couchbase, there isn’t anything equivalent to a table, per se. Each document in a bucket must have a unique key. But a bucket can have a variety of documents in it. Therefore, it’s often wise to come up with a way to differentiate documents within a bucket.

Meaningful keys

For instance, it’s entirely possible to have a FriendbookUser document with a key of 123, and an Update document with a key of 456. However, it might be wise to add some more semantic information to the key. Instead of 123, use a key of FriendbookUser::123. The benefits to putting semantic information in your key include:

-

Readability: At a glance, you can tell what a document is for.

-

Referenceability: If you have a

FriendbookUser::123document, then you could have another document with a keyFriendbookUser::123::Updatesthat has an implicit association.

If you plan on using N1QL, then you may not need keys to be this semantically meaningful. In terms of performance, the shorter the key is, the more of them can be stored in RAM. So only use this pattern if you plan on making heavy use of key/value operations instead of N1QL queries.

Discriminator fields

When using N1QL, another tactic that can be used in addition to or instead of meaningful keys is to add field(s) to a document that are used to differentiate the document. This is often implemented as a type field within a document.

{

"address" : "1800 Brown Rd",

"city" : "Groveport",

"state" : "OH",

"type" : "address"

}There’s nothing magical about the type field. It’s not a reserved word within a document and it’s not treated specially by Couchbase Server. It could just as easily be named documentType, theType, etc. But it can be useful within your application when using N1QL to query documents of a certain kind.

SELECT d.*

FROM `default` d

WHERE d.type = 'address'You may even take it a step further and add an embedded object to your documents to act as a kind of faux 'meta data':

{

"address" : "1800 Brown Rd",

"city" : "Groveport",

"state" : "OH",

"documentInfo" : {

"type" : "address",

"lastUpdated" : "1/29/2017 1:31:10 PM",

"lastUpdatedBy" : "mgroves"

}

}That may be overkill for some applications. It’s similar to a pattern I’ve seen in relational databases: a 'root' table to simulate inheritence within a relational database, or perhaps the same fields tacked on to every table.

Conclusion of part 1

This blog post covered data modeling using denormalization, data modeling using referencing, key design, and discriminating fields. Modeling data in a document database is a thought process, something of an art form, and not a mechanical process. There is no prescription on how to model your data in a document database: it depends greatly on how your application interacts with your data.

You can get the source code for the entire blog series on GitHub now, parts of which were featured in this blog post. If you have questions about various parts of that code, feel free to leave a comment below, or open an issue on GitHub.

Stay tuned for the next blog in the series, where data and data migration will be discussed.

If you have any questions, please leave a comment below, contact me on Twitter, or use the Couchbase Forums.